How We Built an Agentic AI Application in 4 Weeks

Accelerating Early Product Development with Hackathons

AI · TECHNOLOGY

Mark Birch and Luna Luovula

9/22/2025 · 4 min read

Why Hackathons Matter for Startups

Startups live and die by speed. The faster you can test, ship, learn, and iterate, the more likely you are to survive. While this speed traditionally came from months of sleepless nights racing toward product-market fit, AI-accelerated development has compressed that timeline from months to a single hackathon sprint.

Hackathons aren't just competitions; they're accelerators in disguise. They provide a way to bootstrap momentum, validate concepts, and secure prize money that extends runway without diluting equity.

This drove our participation in the Google BigQuery AI Hackathon: an opportunity to launch an MVP of our Agentic AI Application under the TribeROI vision of transforming community data into measurable business insights.

With AI-accelerated development, a single sprint delivers:

A working prototype

Demo and distribution assets

Customer and investor credibility

Prize money for extended runway

What started as a hackathon entry became the foundation for something larger.

From Problem to Vision: Communities as Growth Engines

Companies understand the value of community. The Google Developer Groups network, with 400,000+ developers worldwide, drives product adoption, innovation, recruiting, and brand awareness. Yet measuring this contribution remains challenging.

Large communities generate thousands of weekly data points across events, content, and member interactions. Manual analysis and static dashboards don't scale, leaving companies unable to connect community activities to business outcomes.

We built the first component of an AI agent that connects community activities to measurable business results. The agent works like a smart teammate: ask it a question, and it queries the community data and delivers actionable insights in seconds.

To validate our approach, we tested against one of the world's largest community datasets: the GitHub Archive. If we could derive useful insights from this massive dataset, we would know we were on the right track.

This aligns closely with TribeROI's mission: transforming fragmented community data into clear ROI signals organizations can trust.

Treating the Hackathon Like a Startup Sprint

We approached this hackathon as a startup sprint, not a weekend project. With four weeks, we had to be deliberate: define the core problem, select the right stack, and build a functional product foundation.

Regular syncs kept us aligned on architecture. As the MVP matured, new ideas emerged (evaluation pipelines, additional features, broader data flows), but we scoped ruthlessly and focused on what was critical: making situational community data usable through a conversational interface.

We balanced polishing core functionality with sketching the broader roadmap. This meant shipping a working end-to-end system while gaining clarity on the overall design and product vision.

Most work happened asynchronously, with clear ownership divisions. As the deadline approached, we didn't scramble: the final week focused on integration, refining agent interactions, and packaging our repository. Clear communication and lean collaboration made every meeting count.

This rhythm (align, scope, build, refine) kept the demo focused while laying the foundation for a comprehensive AI agent application.

Two-Level Product Architecture: Built on Google Cloud

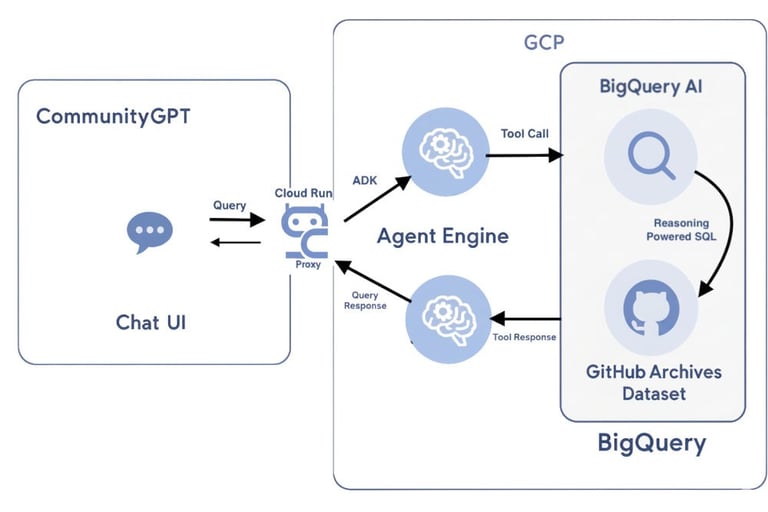

Our core product uses a two-level AI architecture built entirely on Google Cloud. By combining the Vertex AI Agent Development Kit (ADK) with BigQuery AI, we created a zero-infrastructure, cloud-native system designed to scale.

Level 1 — Agent Reasoning: The ADK agent, running on Google's Agent Engine, interprets natural language queries, plans tool calls, manages session memory, reuses engineered tables, applies cost guardrails, and orchestrates multi-step tasks. This is the system's "brain."
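To make "manages session memory" and "reuses engineered tables" more concrete, here is a minimal, hypothetical sketch in plain Python. It is not ADK's session API and not our production code: `SessionMemory`, `table_for`, and the `community_analytics` dataset name are illustrative assumptions. The idea is that the agent normalizes a request into an intent key and, if it already materialized a table for that intent during the session, reuses it instead of issuing a new BigQuery job.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class SessionMemory:
    """Hypothetical per-session cache of engineered tables, keyed by normalized intent."""
    dataset: str = "community_analytics"  # hypothetical scratch dataset
    tables: Dict[str, str] = field(default_factory=dict)

    def table_for(self, intent: str, build_sql: str) -> Tuple[str, Optional[str]]:
        """Return (table_name, sql_to_run); sql_to_run is None when the table is reused."""
        # Normalize case and whitespace so trivially rephrased requests hit the same entry.
        key = " ".join(intent.lower().split())
        if key in self.tables:
            return self.tables[key], None  # reuse: no new BigQuery work needed
        # Derive a stable, SQL-safe table name from the normalized intent.
        digest = hashlib.sha256(key.encode("utf-8")).hexdigest()[:10]
        name = f"{self.dataset}.eng_{digest}"
        self.tables[key] = name
        return name, f"CREATE TABLE IF NOT EXISTS `{name}` AS\n{build_sql}"


memory = SessionMemory()
first = memory.table_for("Weekly PushEvents per repo", "SELECT 1 AS x")
again = memory.table_for("weekly  pushevents per REPO", "SELECT 1 AS x")
print(first[0] == again[0], again[1] is None)  # prints: True True
```

A real agent would record the table only after the `CREATE TABLE` statement succeeds, and would more likely key on a structured query plan than on raw wording; case and whitespace normalization is just the simplest possible matching rule.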

Level 2 — AI Execution: When the agent calls BigQuery as a native execute_sql tool, agent-generated SQL runs directly in BigQuery using functions like AI.GENERATE, AI.FORECAST, and AI.GENERATE_BOOL. Computation happens where data resides, ensuring large-scale analysis runs efficiently.
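As an illustration of Level 2, the Python helper below emits the kind of SQL an agent might generate: it asks AI.GENERATE_BOOL a yes/no question about issues opened on one day of the public GitHub Archive. This is a sketch, not our agent's actual output. The function names, connection ID, and endpoint are hypothetical placeholders, and the AI.GENERATE_BOOL argument names (`connection_id`, `endpoint`) and its `.result` field reflect our reading of the BigQuery documentation, so check the current reference before running it.

```python
import re


def sql_string(value: str) -> str:
    """Quote a Python string as a BigQuery string literal (escapes backslashes and quotes)."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def build_issue_classifier_sql(day: str, question: str, connection_id: str,
                               endpoint: str, sample_size: int = 100) -> str:
    """Build a query that asks a yes/no question about newly opened GitHub issues.

    `day` selects one daily GitHub Archive table (YYYYMMDD). The sample is taken in a
    CTE first so AI.GENERATE_BOOL is applied to at most `sample_size` rows.
    """
    if not re.fullmatch(r"\d{8}", day):
        raise ValueError("day must be YYYYMMDD")
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return f"""
WITH sample AS (
  SELECT repo.name AS repo_name, JSON_VALUE(payload, '$.issue.title') AS title
  FROM `githubarchive.day.{day}`
  WHERE type = 'IssuesEvent' AND JSON_VALUE(payload, '$.action') = 'opened'
  LIMIT {sample_size}
)
SELECT
  repo_name,
  title,
  AI.GENERATE_BOOL(
    ({sql_string(question)}, ' Issue title: ', title),
    connection_id => {sql_string(connection_id)},
    endpoint => {sql_string(endpoint)}
  ).result AS matches
FROM sample
""".strip()


# Placeholder connection and endpoint values; substitute your own.
print(build_issue_classifier_sql("20250101", "Does this issue report a bug?",
                                 "us.my_connection", "my-gemini-endpoint", sample_size=50))
```

Taking the sample in a CTE before calling the AI function is a deliberate choice: it is meant to keep the number of model calls bounded by `sample_size`, since each call adds cost on top of the bytes scanned.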

This two-level design (Agent Reasoning + AI Execution) transforms raw cloud data into accessible, scalable, intelligent analytics by achieving three key outcomes:

Natural language → analytics pipeline

Users don't need SQL fluency or data engineering skills. They ask questions in plain English, and the ADK agent plans queries, reuses tables, and orchestrates multi-step tasks, lowering the barrier to insights.

AI-augmented SQL at scale

By embedding AI functions directly into SQL, computation happens inside BigQuery, where the data lives. This enables insights at data-warehouse scale, from thousands to millions of rows, without data movement or additional infrastructure.

Cost-controlled, production-ready analytics

Guardrails on query planning and session memory ensure efficiency. The system delivers AI analytics at cloud scale while keeping costs predictable, turning "experimental AI" into production-safe operations.
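The cost guardrail idea can be sketched as a pre-execution budget check. BigQuery can estimate bytes processed without running a query (a dry run, for example via `QueryJobConfig(dry_run=True)` in the `google-cloud-bigquery` client, which reports `total_bytes_processed`), and query jobs also accept a `maximum_bytes_billed` limit. The hypothetical `CostGuardrail` below only consumes such an estimate. The $6.25/TiB on-demand rate is an assumption to verify against current pricing for your region, and charges for the AI model calls themselves are billed separately and not modeled here.

```python
from dataclasses import dataclass
from typing import Tuple

TIB = 1024 ** 4  # BigQuery on-demand pricing is quoted per TiB scanned


@dataclass(frozen=True)
class CostGuardrail:
    """Hypothetical pre-execution check on a query's estimated bytes processed."""
    max_bytes: int              # per-query budget chosen by the operator
    usd_per_tib: float = 6.25   # assumed on-demand rate; verify for your region and plan

    def estimate_usd(self, bytes_processed: int) -> float:
        """Convert a byte estimate (e.g. from a dry run) into an approximate dollar cost."""
        return bytes_processed / TIB * self.usd_per_tib

    def check(self, bytes_processed: int) -> Tuple[bool, str]:
        """Allow the query only if its estimate fits within the byte budget."""
        cost = self.estimate_usd(bytes_processed)
        if bytes_processed > self.max_bytes:
            return False, f"blocked: ~{bytes_processed / TIB:.3f} TiB, about ${cost:.2f}"
        return True, f"allowed: about ${cost:.4f}"


guard = CostGuardrail(max_bytes=10 * 1024 ** 3)  # 10 GiB per-query budget
print(guard.check(2 * 1024 ** 3))  # a small, date-bounded scan
print(guard.check(3 * TIB))        # a full-history scan
```

Running the check before execution, rather than relying only on `maximum_bytes_billed`, lets the agent explain a refusal and propose a narrower query (for example, a shorter date range) instead of simply failing.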

To validate scalability, we tested our AI agent on the GitHub Archive dataset, which captures every public GitHub event. Extracting meaningful signals from it while keeping costs under control gave us confidence that the system can handle community data at any scale, from startups to global enterprises.
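On a dataset the size of the GitHub Archive, controlling cost largely comes down to scanning less. One common pattern, sketched below as a hypothetical helper, is to query the daily tables through a wildcard and bound `_TABLE_SUFFIX`, so BigQuery reads only the days in range, then pass the aggregated daily series to AI.FORECAST. The function name, repositories, and horizon are illustrative. The AI.FORECAST arguments shown (`data_col`, `timestamp_col`, `id_cols`, `horizon`) and its acceptance of a subquery as input follow our understanding of the BigQuery reference and should be checked before use.

```python
import re
from datetime import date
from typing import Iterable

# Conservative owner/name pattern so repository names can be inlined safely.
REPO_NAME = re.compile(r"[A-Za-z0-9_.-]+/[A-Za-z0-9_.-]+")


def build_forecast_sql(repos: Iterable[str], start: date, end: date,
                       horizon_days: int = 14) -> str:
    """Forecast daily event counts per repository from a bounded slice of GitHub Archive."""
    if start > end or start.year != end.year:
        raise ValueError("this sketch expects start <= end within a single year")
    if horizon_days <= 0:
        raise ValueError("horizon_days must be positive")
    names = list(repos)
    if not names or not all(REPO_NAME.fullmatch(n) for n in names):
        raise ValueError("repos must be non-empty owner/name strings")
    repo_list = ", ".join(f"'{n}'" for n in names)  # safe: names validated above
    return f"""
SELECT *
FROM AI.FORECAST(
  (
    SELECT TIMESTAMP_TRUNC(created_at, DAY) AS event_day,
           repo.name AS repo_name,
           COUNT(*) AS events
    FROM `githubarchive.day.{start.year}*`
    WHERE _TABLE_SUFFIX BETWEEN '{start:%m%d}' AND '{end:%m%d}'
      AND repo.name IN ({repo_list})
    GROUP BY event_day, repo_name
  ),
  data_col => 'events',
  timestamp_col => 'event_day',
  id_cols => ['repo_name'],
  horizon => {horizon_days}
)
""".strip()


print(build_forecast_sql(["apache/beam", "golang/go"],
                         date(2025, 1, 1), date(2025, 3, 31), horizon_days=7))
```

Restricting the range to a single year keeps the wildcard prefix (`githubarchive.day.2025*`) specific, which generally narrows the set of tables BigQuery must consider compared with a bare `*`; the suffix filter then limits the scan to the requested days.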

These layers transform BigQuery AI from isolated functions into a scalable, production-grade conversational analytics platform built entirely on Google's cloud infrastructure. This represents our two-level architecture's promise: enterprise-scale analytics that are conversational, cost-efficient, and production-ready.

Impact: Why This Matters for Founders

Our experience demonstrates how hackathons accelerate product development while leveraging Google's AI stack:

Accelerate development: We built a product foundation in weeks that would otherwise require months.

Bootstrap with prizes: Hackathons provide prize pools that extend runway without equity dilution.

Multiply assets: One sprint generated technical assets (code, architecture, deployment), narrative assets (blog, demo video), and credibility (public visibility + judge validation).

Ride AI's acceleration curve: Google’s cloud infrastructure and AI tooling enable idea → MVP → public launch in a single sprint.

The result is an AI agent that can turn raw GitHub data into categorized, summarized, and forecastable insights in seconds instead of hours. Queries costing $100 as traditional SQL at scale run for pennies with BigQuery AI-powered SQL. Our agent generates and optimizes pipelines from natural language, reducing specialized data engineering needs while accelerating time to value for community owners.

For startups willing to use hackathons as strategic sprints rather than weekend diversions, the opportunity has never been better to accelerate the process from startup idea to MVP to scalable product.

Curious to learn more about what we built and to try it yourself? Check out our demo video and our public GitHub repository.

TribeROI

Unlocking the business impact of communities through data-driven strategies, services, and technology


© 2025. TribeROI. All rights reserved.

Made with love in Taipei & NYC❤️